upload to s3 bucket curl php curl_exec x-amz-algorithm

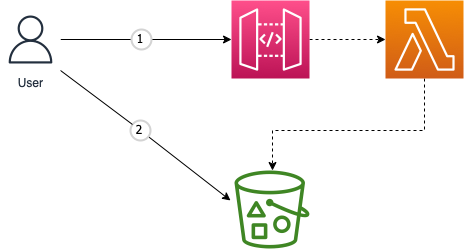

Creating S3 Upload Presigned URL with API Gateway and Lambda

Let's create an S3 upload presigned URL generator using Lambda fronted by the API gateway.

For a user to upload a file to a specific S3 bucket, he/she first fires a request to the API gateway. The API gateway dispatches the request to the lambda function, which in turn talks to the S3 bucket to get a pre-signed upload URL. This URL is then returned to the user as the response of the API gateway. The user then uploads his/her file to the bucket with the URL.

Terraform is used to build the above architecture.
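The request flow described above can be sketched from the client's side in Go. This is only an illustration under stated assumptions: the helper names (fetchPresignedURL, upload, demo) are hypothetical, and the two httptest servers are local stand-ins for the API gateway and the S3 bucket so the sketch can run without an AWS account.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/url"
)

// fetchPresignedURL asks the API for an upload URL for the given filename.
// The response body is expected to be the presigned URL as plain text.
func fetchPresignedURL(apiURL, filename string) (string, error) {
	resp, err := http.Get(apiURL + "?filename=" + url.QueryEscape(filename))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("presign request failed: %s", resp.Status)
	}
	return string(body), nil
}

// upload sends the content to the presigned URL with an HTTP PUT.
func upload(presignedURL string, content []byte) error {
	req, err := http.NewRequest(http.MethodPut, presignedURL, bytes.NewReader(content))
	if err != nil {
		return err
	}
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return fmt.Errorf("upload failed: %s", resp.Status)
	}
	return nil
}

// demo runs both steps against local stand-in servers (assumptions, not AWS)
// and returns what the fake bucket received as "path:body".
func demo() (string, error) {
	received := make(chan string, 1)
	bucket := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodPut {
			http.Error(w, "PUT only", http.StatusMethodNotAllowed)
			return
		}
		body, _ := io.ReadAll(r.Body)
		received <- r.URL.Path + ":" + string(body)
	}))
	defer bucket.Close()

	// The fake API answers with a URL pointing at the fake bucket.
	api := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, bucket.URL+"/"+r.URL.Query().Get("filename")+"?X-Amz-Expires=900")
	}))
	defer api.Close()

	presigned, err := fetchPresignedURL(api.URL+"/v1/url", "abc.txt")
	if err != nil {
		return "", err
	}
	if err := upload(presigned, []byte("hello")); err != nil {
		return "", err
	}
	return <-received, nil
}

func main() {
	got, err := demo()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(got)
}
```

Against the real service, the same two helpers would be pointed at the API gateway invoke URL instead of the stand-in servers.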

Lambda Function

The lambda function is implemented using Golang.

    package main

    import (
        "context"
        "log"
        "os"

        "github.com/aws/aws-lambda-go/events"
        "github.com/aws/aws-lambda-go/lambda"
        "github.com/aws/aws-sdk-go-v2/aws"
        "github.com/aws/aws-sdk-go-v2/config"
        "github.com/aws/aws-sdk-go-v2/service/s3"
    )

    func handler(ctx context.Context, request events.APIGatewayProxyRequest) (events.APIGatewayProxyResponse, error) {
        // Load credentials and region from the Lambda execution environment.
        cfg, err := config.LoadDefaultConfig(ctx)
        if err != nil {
            log.Fatalf("Failed to load default config: %v", err)
        }

        client := s3.NewFromConfig(cfg)
        psClient := s3.NewPresignClient(client)

        // The bucket comes from the environment, the object key from the query string.
        bucket := os.Getenv("Bucket")
        filename := request.QueryStringParameters["filename"]
        // log.Printf("bucket: %s, filename: %s", bucket, filename)

        resp, err := psClient.PresignPutObject(ctx, &s3.PutObjectInput{
            Bucket: aws.String(bucket),
            Key:    aws.String(filename),
        })
        if err != nil {
            log.Fatalf("Failed to request for presign url: %v", err)
        }

        // The presigned URL is returned as the plain response body.
        return events.APIGatewayProxyResponse{Body: resp.URL, StatusCode: 200}, nil
    }

    func main() {
        lambda.Start(handler)
    }

Notice that in the lambda handler, the function parameter is events.APIGatewayProxyRequest and the return type is events.APIGatewayProxyResponse. We get the filename from the query string and use it as the key for the S3 object, and we get the bucket from the environment variable. We then call the S3 API to create a PresignPutObject request. The presigned URL can be obtained from the S3 response.

Now let's create the lambda with Terraform.
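Since the object key comes straight from the query string, a lookup of a missing "filename" parameter yields an empty string in Go. A minimal sketch of a guard follows; the objectKey helper is hypothetical and not part of the original handler, and what counts as an acceptable key is an assumption that would depend on the application.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// objectKey extracts the "filename" query parameter and rejects values
// that are missing or blank. (Hypothetical helper for illustration.)
func objectKey(query map[string]string) (string, error) {
	name := strings.TrimSpace(query["filename"])
	if name == "" {
		return "", errors.New("missing filename query parameter")
	}
	return name, nil
}

func main() {
	key, err := objectKey(map[string]string{"filename": "abc.txt"})
	fmt.Println(key, err)

	_, err = objectKey(map[string]string{})
	fmt.Println(err)
}
```

In a handler, a failed check like this could be turned into a 4xx response rather than a presign call, though the original code does not do so.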

    resource "aws_lambda_function" "s3-presigned-url" {
      function_name    = "s3-presigned-url"
      filename         = "./lambda-code/src/s3-presigned-url.zip"
      handler          = "s3-presigned-url.bin"
      source_code_hash = filebase64sha256("./lambda-code/src/s3-presigned-url.zip")
      role             = aws_iam_role.lambda-s3-role.arn
      runtime          = "go1.x"

      environment {
        variables = {
          Bucket = aws_s3_bucket.my-bucket.bucket
        }
      }
    }

The executable file is named s3-presigned-url.bin. The zip file is created with the executable file only.

In the environment section, we define a variable named Bucket whose value references the S3 Terraform resource. (The HCL source of the S3 resource is omitted here.)

To run the lambda, we need to assign it a proper IAM role. This is accomplished with the following:

    variable "lambda_assume_role_policy_document" {
      type        = string
      description = "assume role policy document"
      default     = <<-EOF
        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Action": "sts:AssumeRole",
              "Principal": {
                "Service": "lambda.amazonaws.com"
              },
              "Effect": "Allow"
            }
          ]
        }
      EOF
    }

    resource "aws_iam_policy" "my-lambda-iam-policy" {
      name        = "my-lambda-iam-policy"
      path        = "/"
      description = "My lambda policy - base"
      policy      = <<-EOF
        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents"
              ],
              "Effect": "Allow",
              "Resource": "*"
            }
          ]
        }
      EOF
    }

    resource "aws_iam_role" "lambda-s3-role" {
      name               = "my_lambda_iam_s3_role"
      assume_role_policy = var.lambda_assume_role_policy_document
    }

    resource "aws_iam_role_policy_attachment" "base-role" {
      role       = aws_iam_role.lambda-s3-role.name
      policy_arn = aws_iam_policy.my-lambda-iam-policy.arn
    }

    data "aws_iam_policy" "s3-admin-policy" {
      name = "AmazonS3FullAccess"
    }

    resource "aws_iam_role_policy_attachment" "s3-role" {
      role       = aws_iam_role.lambda-s3-role.name
      policy_arn = data.aws_iam_policy.s3-admin-policy.arn
    }

Starting with a variable defining the assume role policy, we create the aws_iam_role resource. The lambda function needs to access the CloudWatch Logs and S3 services. We create a new policy for CloudWatch and reuse the S3 permission by referencing the AWS-managed policy through a data source. Then we use "aws_iam_role_policy_attachment" twice to attach the policies to the role. (Certainly, for production usage, we may want to tighten the S3 permission to s3:PutObject only.)

API Gateway

The API Gateway is created with api, stage, integration, and route.

    resource "aws_apigatewayv2_api" "s3upload" {
      name          = "s3upload"
      protocol_type = "HTTP"
    }

    resource "aws_apigatewayv2_stage" "v1" {
      api_id      = aws_apigatewayv2_api.s3upload.id
      name        = "v1"
      auto_deploy = true
    }

    resource "aws_apigatewayv2_integration" "s3upload" {
      api_id             = aws_apigatewayv2_api.s3upload.id
      integration_type   = "AWS_PROXY"
      connection_type    = "INTERNET"
      description        = "s3upload presign url"
      integration_method = "POST"
      integration_uri    = aws_lambda_function.s3-presigned-url.invoke_arn
    }

    resource "aws_apigatewayv2_route" "s3upload" {
      api_id         = aws_apigatewayv2_api.s3upload.id
      operation_name = "s3upload"
      route_key      = "GET /url"
      target         = "integrations/${aws_apigatewayv2_integration.s3upload.id}"
    }

We start by defining an HTTP API gateway. The stage resource generates an invoke URL base to trigger the API. We are using "v1" instead of "$default" here. The integration specifies how the API gateway forwards the request to the Lambda function. Notice that integration_type and integration_method are AWS_PROXY and POST respectively. It seems POST is the only method the API Gateway can use to integrate with the lambda. The route resource connects the API and the integration through the target attribute. The URL path and the HTTP method are defined in the route_key attribute. With these settings, we expect the full invoke URL and path to be something like the following:

https://{random string}.execute-api.us-east-1.amazonaws.com/v1/url
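The shape of the invoke URL above can be expressed as a small Go helper. This is a sketch for illustration: the invokeURL function and the API id "abc123" are hypothetical, and the special case reflects that an HTTP API's "$default" stage is served from the base URL without a stage segment.

```go
package main

import "fmt"

// invokeURL assembles the invoke URL of an HTTP API from its id, region,
// stage name and route path. (Hypothetical helper for illustration.)
func invokeURL(apiID, region, stage, routePath string) string {
	base := fmt.Sprintf("https://%s.execute-api.%s.amazonaws.com", apiID, region)
	// The "$default" stage has no stage segment in the URL.
	if stage != "$default" {
		base += "/" + stage
	}
	return base + routePath
}

func main() {
	// "abc123" stands in for the random API id that AWS assigns.
	fmt.Println(invokeURL("abc123", "us-east-1", "v1", "/url"))
	fmt.Println(invokeURL("abc123", "us-east-1", "$default", "/url"))
}
```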

This is not enough yet; we need to allow the API gateway to invoke the lambda function. If we are using the AWS web console, there is a default setting to enable the permission. In the Terraform case, we enable the permission with the lambda resource policy:

    resource "aws_lambda_permission" "apigw-permission" {
      statement_id  = "AllowAPIInvoke"
      action        = "lambda:InvokeFunction"
      function_name = "s3-presigned-url"
      principal     = "apigateway.amazonaws.com"

      # The /*/*/* part allows invocation from any stage, method and resource path
      source_arn = "${aws_apigatewayv2_api.s3upload.execution_arn}/*/*/*"
    }

Notice that we can further tighten the permission to a specific stage, method, and path combination in the source_arn attribute.

Apply all the Terraform code. We can then get the API gateway invoke URL.

Testing

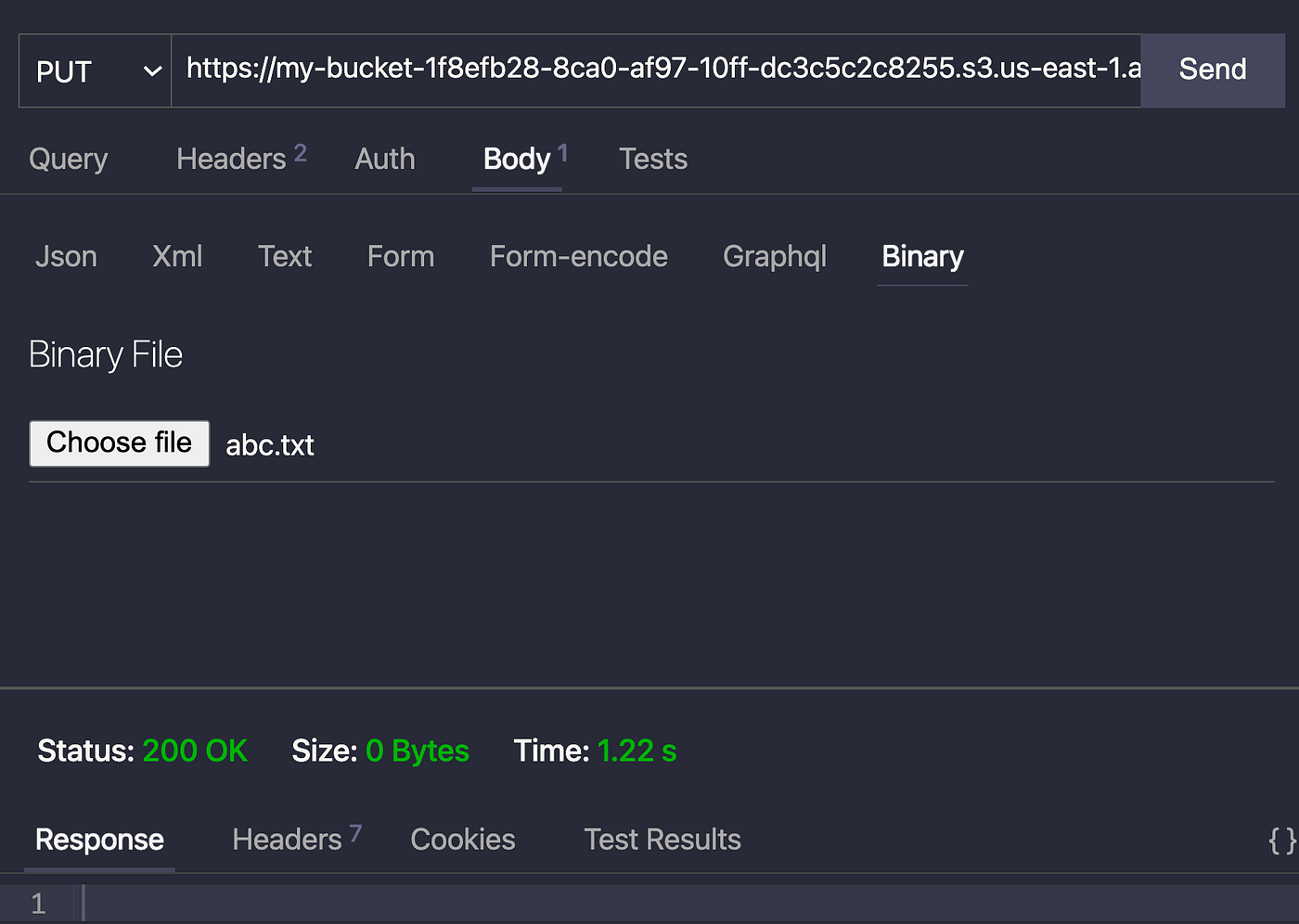

Suppose we want to upload a file, abc.txt, to the S3 bucket.

Run the following curl command to get the pre-signed URL from the API gateway.

    curl 'https://o2cfq73ski.execute-api.us-east-1.amazonaws.com/v1/url?filename=abc.txt'

The response is the S3 upload presigned URL:

https://my-bucket-1f8efb28-8ca0-af97-10ff-dc3c5c2c8255.s3.us-east-1.amazonaws.com/abc.txt?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=ASIA5GCH7TYAE664OJNK%2F20220102%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20220102T075911Z&X-Amz-Expires=900&X-Amz-Security-Token=...

Launch the Thunder Client in VS Code, create a new PUT request with the URL, and select the binary file as the body.

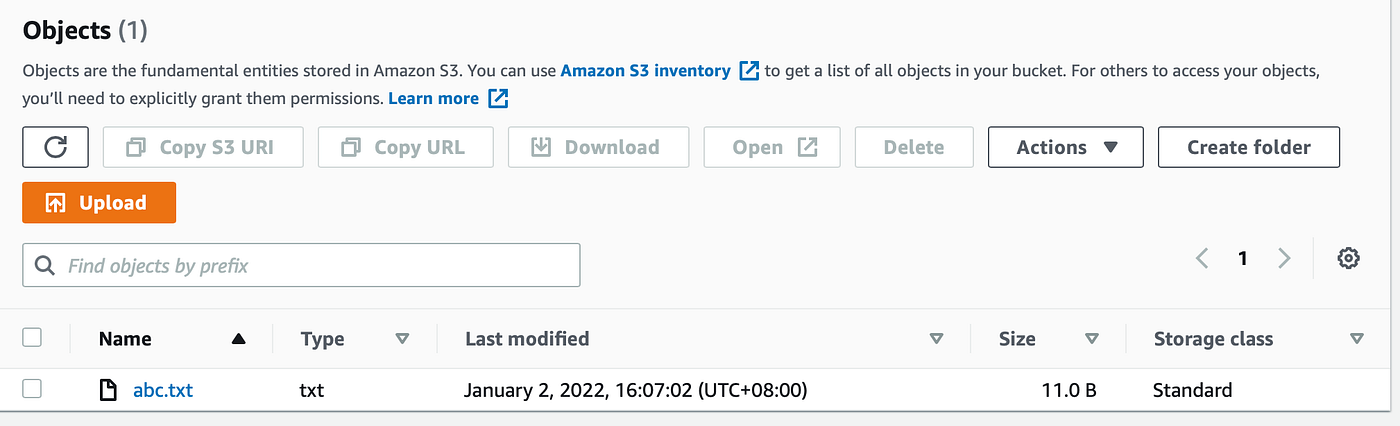

The request is a success. We can now see that the file abc.txt has been uploaded to the S3 bucket.
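The query parameters of a presigned URL like the one returned above carry the signing algorithm, the signing time, and the validity period in seconds. The following Go sketch reads them back with the standard library. The inspectPresignedURL helper and the shortened sample URL with the bucket name "my-bucket" are assumptions made for illustration; the time layout matches the X-Amz-Date format seen in the response.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
	"strings"
	"time"
)

// presignInfo holds the fields read back from a presigned URL.
type presignInfo struct {
	Key       string
	Algorithm string
	SignedAt  time.Time
	Expires   time.Duration
}

// ExpiresAt returns the moment after which the URL is no longer valid.
func (p presignInfo) ExpiresAt() time.Time {
	return p.SignedAt.Add(p.Expires)
}

// inspectPresignedURL parses the object key and the X-Amz-* query
// parameters from a presigned URL. (Hypothetical helper for illustration.)
func inspectPresignedURL(raw string) (presignInfo, error) {
	u, err := url.Parse(raw)
	if err != nil {
		return presignInfo{}, err
	}
	q := u.Query()
	secs, err := strconv.Atoi(q.Get("X-Amz-Expires"))
	if err != nil {
		return presignInfo{}, fmt.Errorf("bad X-Amz-Expires: %w", err)
	}
	signedAt, err := time.Parse("20060102T150405Z", q.Get("X-Amz-Date"))
	if err != nil {
		return presignInfo{}, fmt.Errorf("bad X-Amz-Date: %w", err)
	}
	return presignInfo{
		Key:       strings.TrimPrefix(u.Path, "/"),
		Algorithm: q.Get("X-Amz-Algorithm"),
		SignedAt:  signedAt,
		Expires:   time.Duration(secs) * time.Second,
	}, nil
}

func main() {
	// Shortened sample URL; the bucket name is a placeholder.
	sample := "https://my-bucket.s3.us-east-1.amazonaws.com/abc.txt" +
		"?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Date=20220102T075911Z&X-Amz-Expires=900"
	info, err := inspectPresignedURL(sample)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(info.Key, info.Algorithm, info.Expires, info.ExpiresAt())
}
```

With X-Amz-Expires=900, the URL is valid for 15 minutes after the signing time, so the upload has to happen within that window.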

Source: https://blog.devgenius.io/creating-s3-upload-presigned-url-with-api-gateway-and-lambda-fd89c08bd1aa
